The Hunt for a Better Search

Posted 1 year, 3 weeks ago.

It was all Twitter’s fault.

To be clear, I don’t mean the version owned by the over-confident billionaire. It started well before that. The service had always been a minefield to work with. GIFwrapped had users that really wanted to be able to download GIFs from their timeline, but I’d never really managed to articulate my needs well enough to be granted API access.

So I had made do with grabbing the HTML source with a URLSession and inferring as much as I could from there. Eventually, of course, that ended up breaking… almost as if Twitter never wanted people to have unrestricted access to its content in the first place.

For a few years, I just nailed on new features and workarounds for this issue and that one, until the architecture was a mess of code with wacky bolt-ons for dealing with Twitter’s nonsense. Those bolt-ons had to exist, because my customers demanded them.

At the end of 2022, it all came to a head, and I broke. I was constantly dealing with Twitter breaking the insane web of code, and I needed something better designed to be able to deal with its nonsense. Something extensible. Something I could use to run live tests way more easily.

That would allow me to validate whether my code was still working and, ideally, stay ahead of issues, instead of playing catch-up every time Twitter deployed an update.

So I began to pull apart GIFwrapped’s search architecture, and rebuild it from scratch.

I had a few really clear goals in terms of the new architecture.

- I wanted a system that allowed for extensibility from the get-go. Even better if it was something I could potentially open up in the future, maybe even letting others plug in their own engines.

- I wanted something I could test really easily, with both fake data and live data, so I could regularly validate that things were working and have a form of early warning system when they broke.

- I wanted it to use modern approaches, built in from the very core. Some other parts of GIFwrapped were already built on Combine, but I needed to skip past that and move right to structured concurrency, the clear and obvious replacement.

The good news is that I already had some understanding of what I needed to build. I’d hacked a solution that did the right thing into the existing mess of code, but it was a side path, separate from the actual search handling.

To understand that, we have to begin with the very basics: the text that a user will put into GIFwrapped’s search field.

Query

The search query is already a bit more complex than the ones you’d find in most apps, in part because it also handles URLs: it loads the source at the given URL and parses it for references to GIFs.

But the query actually goes beyond even that, and the simplified interface looks something like the following:

```swift
import Foundation

struct Query: Hashable, Sendable {
    /// A special word, designated in the original string by a preceding bang (!),
    /// typically used to select an alternate search engine to handle the query.
    /// `nil` when the string doesn't contain one.
    var command: String?

    /// A table of keys and values, where the value is the first defined for that
    /// key in the original string. These are defined in the format `{key}:{value}`.
    var parameters: [String: String]

    /// The original search string, with the `command` and `parameters` removed.
    var term: String

    /// The search term, parsed as a URL and validated to ensure that it has both
    /// a scheme and a host, otherwise `nil`.
    var url: URL? {
        switch URL(string: term) {
        case let url? where url.scheme != nil && url.host != nil:
            return url
        default:
            return nil
        }
    }
}
```

I think this is mostly self-explanatory, and if you’ve used GIFwrapped, you might have actually seen this in use before.

Ever since I combined the tabs into one single interface, waaay back in the day, the Photos support has used queries like !photos is:live to pull in live photos and burst images from the Photos app.

But also, you’ve almost always been able to select an alternate search engine. At one point it was the only way to do so. If you wanted to send a query to Giphy instead of Tenor (the current default), you could search for !giphy hello world and it would route it correctly.
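The post doesn't show how the raw string becomes a Query, so here is a minimal sketch built on a few assumptions of my own. Tokens are split on whitespace. The first `!word` becomes the command. Any `key:value` token without a slash becomes a parameter, and the first value wins. The remaining words are rejoined as the term. `parseQuery(_:)` and `parameter(from:)` are hypothetical names, and `command` is optional so that a query without a bang can be represented.

```swift
import Foundation

struct Query: Hashable, Sendable {
    var command: String?
    var parameters: [String: String] = [:]
    var term: String = ""

    /// Same rule as above: the term must parse with both a scheme and a host.
    var url: URL? {
        guard let url = URL(string: term), url.scheme != nil, url.host != nil else { return nil }
        return url
    }
}

/// Hypothetical helper: returns a `key:value` pair, or nil if the token isn't one.
/// Tokens containing "/" are skipped so URLs like "https://example.com" stay in the term.
func parameter(from token: String) -> (key: String, value: String)? {
    guard !token.contains("/"), let colon = token.firstIndex(of: ":") else { return nil }
    let key = String(token[..<colon])
    let value = String(token[token.index(after: colon)...])
    if key.isEmpty || value.isEmpty { return nil }
    return (key: key, value: value)
}

/// Hypothetical tokenizer for the search field.
func parseQuery(_ input: String) -> Query {
    var query = Query()
    var words: [String] = []
    for token in input.split(whereSeparator: { $0.isWhitespace }).map(String.init) {
        if query.command == nil, token.count > 1, token.hasPrefix("!") {
            // The first bang-prefixed word selects the engine.
            query.command = String(token.dropFirst())
        } else if let pair = parameter(from: token) {
            // Only the first value defined for a key is kept.
            if query.parameters[pair.key] == nil {
                query.parameters[pair.key] = pair.value
            }
        } else {
            words.append(token)
        }
    }
    query.term = words.joined(separator: " ")
    return query
}
```

With these rules, `!giphy hello world` yields the command `giphy` and the term `hello world`. A pasted link keeps its `https://` prefix because the slash check stops it from being mistaken for a parameter.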

So if we’re going to have multiple sources accessible via this query, we’re going to need multiple components that can each handle a query: one for Tenor, one for Giphy, one for Photos, etc.

So that naturally leads us right into…

Engine

So in terms of our architecture, an Engine is going to be something that takes a query, and returns… results, right? If we assume that a Result is a structure that defines details about a specific GIF—its name, dimensions, location of the actual file—then our protocol for an Engine might look like this:

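```swift
protocol Engine {
    func results(for query: Query) async throws -> [Result]
}

/// Note: within the module, this shadows Swift's own `Result` type.
struct Result: Hashable, Sendable, Codable {
    var name: String
    var width: Int
    var height: Int
    /// ...you get the picture.
}
```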

This makes sense as an API, and it’s actually how the old system worked (except that it used a completion handler). The only issue is that every engine then has to serve up its GIFs in one bundle, and if the result set changed for some reason after that, there’d be no way to deliver the changes.

Wait… changes? To search results?

Remember how GIFwrapped’s Photos support is actually just part of search? Well, yeah… its results might actually change over time, because a query like !photos is:live is just returning all the Live Photos, and if the user takes another one while the search is active, we’d have the ability to update our results to show it… as long as those results are monitoring the Photos API.

So instead, what if we return a structure that can output a stream of results? If it just returns a single set, that’s fine, but a solution like that would also support things like additional results coming through over time, or just updating the results.

So that would mean we end up with something like the following:

```swift
protocol Engine {
    func results(for query: Query) -> AsyncThrowingStream<[Result], Error>
}
```
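To make the "results that change over time" idea concrete, here is a small sketch of an engine written against this protocol. `ChangingEngine` is hypothetical. It yields an initial snapshot and then an updated one straight away, whereas a real Photos-backed engine would yield from a change observer. `Result` and `Query` are cut down to a single field each.

```swift
/// Trimmed-down stand-ins for the article's types.
struct Result: Hashable, Sendable { var name: String }
struct Query: Hashable, Sendable { var term: String }

protocol Engine {
    func results(for query: Query) -> AsyncThrowingStream<[Result], Error>
}

/// Hypothetical engine that yields an initial snapshot, then an updated one,
/// the way a Photos-backed search might when the user captures a new Live Photo.
struct ChangingEngine: Engine {
    func results(for query: Query) -> AsyncThrowingStream<[Result], Error> {
        AsyncThrowingStream { continuation in
            let initial = [Result(name: "IMG_0001")]
            continuation.yield(initial)
            // A real engine would yield this later, from a change observer.
            continuation.yield(initial + [Result(name: "IMG_0002")])
            continuation.finish()
        }
    }
}

/// A consumer that treats each element as the latest full set of results.
func snapshotSizes(from engine: some Engine, query: Query) async throws -> [Int] {
    var sizes: [Int] = []
    for try await snapshot in engine.results(for: query) {
        sizes.append(snapshot.count)
    }
    return sizes
}
```

In this sketch each element is a complete snapshot, so the UI can simply replace what's on screen. An engine that only ever has one batch yields once and finishes.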

The next thing to consider is pagination. GIFwrapped already has a solution that allows it to detect that the user is scrolling towards the end of the collection view, and the UI layer can then request the next batch of results to append to those already on screen, making it feel like the list never ends.

The problem we have is that different engines implement pagination in different ways. The most common is a simple integer value representing the offset, but for some (like Tenor) it’s an opaque string cursor that doesn’t increment in any obvious way. The only way to get the next cursor is from the last set of results.

Fortunately, both approaches can be handled the same way. We allow the underlying engine to return a cursor for the next page of results, and all the UI layer has to do is pass back the value it got most recently.

So first we define our Pagination value as follows:

```swift
enum Pagination: Hashable, Sendable, Codable {
    /// Results offset by the given integer.
    case offsetBy(Int)

    /// A string that represents a position within a result set, which is used by
    /// some engines (like Tenor).
    case cursor(String)

    /// The first set of results.
    static var start: Self { .offsetBy(0) }
}
```

This defines something we can use for all initial requests (.start), and after that our UI can simply use whatever case the engine returned last. The engine itself is the only one who needs to know or care about what the actual value is. The rest is just passing around or storing the most recent value.

So we do actually have to have some mechanism to return the pagination, and we can do that by wrapping our array of Results in a structure.

```swift
protocol Engine {
    func results(for query: Query) -> AsyncThrowingStream<ResultSet, Error>
}

struct ResultSet: Hashable, Sendable, Codable {
    /// The actual results returned for the query.
    var results: [Result]

    /// The total number of results for this query, including other pages, if
    /// that's something supported by the engine.
    var total: Int?

    /// The value to use to retrieve the next page of results.
    var next: Pagination?
}
```

This also allows us to pass back some other useful metadata. For example, if the engine knows the total number of results available for the query, it can return that, and we can display it in the UI. Not all engines support it, unfortunately, but it’s always nice to have.

So now we just need to be able to send that pagination into the engine, and we’ll do that by putting the Query inside a Request.

```swift
protocol Engine {
    func results(for request: Request) -> AsyncThrowingStream<ResultSet, Error>
}

struct Request: Hashable, Sendable {
    /// The parsed query string.
    var query: Query

    /// The desired number of results for engines that support pagination.
    /// Considered to be a guide, not a rule.
    var numberOfResults: Int = 50

    /// The requested pagination.
    var pagination: Pagination = .start
}
```

Aside from specifying the pagination value, this allows the UI to request a specific numberOfResults, perfect for ensuring that each new page gives just enough to scroll through before fetching another. Again, not every engine can support this, even if it supports pagination, so it’s never treated as a hard rule, but it does allow the UI to tell the search engine its preferred number of results based on the screen size.
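Here is a sketch of how a single UI loop can page through both kinds of engine without ever inspecting the Pagination value. The types are trimmed-down copies of the ones above, with plain strings as results. `offsetEngine(_:_:)`, `CursorEngine` and `loadAllPages(pageSize:fetch:)` are hypothetical stand-ins. One assumption is worth calling out: a cursor-based engine has to treat `.start` (which is `.offsetBy(0)`) as "first page", because it doesn't have a cursor yet.

```swift
enum Pagination: Hashable, Sendable {
    case offsetBy(Int)
    case cursor(String)
    static var start: Self { .offsetBy(0) }
}

struct Request: Hashable, Sendable {
    var numberOfResults: Int = 50
    var pagination: Pagination = .start
}

struct ResultSet: Hashable, Sendable {
    var results: [String]
    var next: Pagination?
}

/// Hypothetical offset-based engine over an in-memory catalogue.
func offsetEngine(_ catalogue: [String], _ request: Request) -> ResultSet {
    guard case .offsetBy(let offset) = request.pagination,
          offset >= 0, offset < catalogue.count else {
        return ResultSet(results: [], next: nil)
    }
    let end = min(offset + request.numberOfResults, catalogue.count)
    return ResultSet(
        results: Array(catalogue[offset..<end]),
        next: end < catalogue.count ? Pagination.offsetBy(end) : nil
    )
}

/// Hypothetical cursor-based engine: each page hands back an opaque token for the next.
struct CursorEngine {
    var pages: [String: (results: [String], next: String?)]
    var firstToken: String

    func resultSet(for request: Request) -> ResultSet {
        let token: String
        switch request.pagination {
        case .offsetBy(0): token = firstToken      // `.start` means "first page"
        case .cursor(let value): token = value
        case .offsetBy: return ResultSet(results: [], next: nil)
        }
        guard let page = pages[token] else { return ResultSet(results: [], next: nil) }
        return ResultSet(results: page.results, next: page.next.map(Pagination.cursor))
    }
}

/// The UI side: store whatever `next` came back and send it with the following request.
func loadAllPages(pageSize: Int, fetch: (Request) -> ResultSet) -> [String] {
    var all: [String] = []
    var next: Pagination? = .start
    while let pagination = next {
        let page = fetch(Request(numberOfResults: pageSize, pagination: pagination))
        all += page.results
        next = page.next  // Never inspected, only passed back.
    }
    return all
}
```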

Perfect.

Except, no. The biggest downside we now have is that each engine is totally siloed. They all perform their own networking, and while we can choose one to perform a query, it’s actually difficult for us to handle different kinds of URLs differently, which is a core part of this whole system.

It would be trivial to build an HTTPEngine to reach out and fetch the contents of a page at a given URL (and in fact, I totally have), but then that HTTPEngine would need to be able to parse any response or data structure that comes back. Don’t get me wrong, we could implement something as a fallback (and again, I totally have), but it’s useful to have something a little more fine-tuned.

Parser

Okay, so what we need is a new protocol that can first determine if it’s able to process a URLResponse, and then… process it.

A little something like:

```swift
protocol Parser: Sendable {
    func canParse(_ response: URLResponse) -> Bool
    func parse(_ data: Data, with response: URLResponse) throws -> ResultSet
}
```
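As an illustration, here is what a parser for an imaginary JSON API might look like. The host `api.example.com`, the payload shape and `ExampleJSONParser` are all made up for this sketch. The point is that `canParse(_:)` only needs to look at the response metadata, and `parse(_:with:)` only needs to understand the body.

```swift
import Foundation
#if canImport(FoundationNetworking)
import FoundationNetworking  // URLResponse lives here on Linux
#endif

struct Result: Hashable, Sendable, Codable {
    var name: String
    var width: Int
    var height: Int
}

struct ResultSet: Hashable, Sendable {
    var results: [Result]
    var total: Int?
}

protocol Parser: Sendable {
    func canParse(_ response: URLResponse) -> Bool
    func parse(_ data: Data, with response: URLResponse) throws -> ResultSet
}

/// Hypothetical parser for an imaginary API that answers with
/// {"total": 9, "items": [{"name": "...", "width": 320, "height": 240}]}.
struct ExampleJSONParser: Parser {
    private struct Payload: Decodable {
        var total: Int?
        var items: [Result]
    }

    /// Only claims JSON responses from the made-up API host.
    func canParse(_ response: URLResponse) -> Bool {
        response.mimeType == "application/json" && response.url?.host == "api.example.com"
    }

    func parse(_ data: Data, with response: URLResponse) throws -> ResultSet {
        let payload = try JSONDecoder().decode(Payload.self, from: data)
        return ResultSet(results: payload.items, total: payload.total)
    }
}
```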

Actually integrating this with our system is the next step. If we do indeed have an HTTPEngine, it could just iterate through the available parsers to find the right one for the response, but… what if someone enters a Giphy API URL into the search box? It’s a crazy idea, but it’s technically possible. And it gets more complex as I build out more and more engines. If the parsing code is trapped inside an engine, the HTTPEngine won’t necessarily be able to access it.

The obvious answer (stop yelling it at me) is to make our engines also parsers, and we can totally do that, but we can go a step further. If we entirely separate the lookup and parsing responsibilities, we can actually make it so that any engine can use any parser.

So instead of our engine returning a straight-up stream of ResultSets, let’s start by returning something that we can customise a little more. I’m going to call it a Handler because I’m feeling unimaginative, and all it’s going to do is provide a wrapper for initialising our AsyncThrowingStream.

It looks a little like this (and just to be clear, I’m going to focus on just one init for this example, because it’s already complicated enough):

```swift
protocol Engine {
    func handler(for request: Request) -> Handler
}

protocol HandlerContinuation: Sendable {
    func yield(_ resultSet: ResultSet)
}

/// Hypothetical adapter: the stream continuation's own `yield(_:)` returns a
/// `YieldResult`, so it can't satisfy the Void requirement above directly.
struct StreamContinuation: HandlerContinuation {
    let base: AsyncThrowingStream<ResultSet, Error>.Continuation

    func yield(_ resultSet: ResultSet) {
        base.yield(resultSet)
    }
}

struct Handler {
    private var handler: @Sendable (
        _ environment: Environment
    ) -> AsyncThrowingStream<ResultSet, Error>

    init(
        _ handler: @Sendable @escaping (
            _ environment: Environment,
            _ continuation: HandlerContinuation
        ) async throws -> Void
    ) {
        self.handler = { environment in
            AsyncThrowingStream { continuation in
                Task {
                    do {
                        // Returning from the closure finishes the stream...
                        try await handler(environment, StreamContinuation(base: continuation))
                        continuation.finish()
                    }
                    catch {
                        // ...and throwing finishes it with the error.
                        continuation.finish(throwing: error)
                    }
                }
            }
        }
    }

    func callAsFunction(environment: Environment) -> AsyncThrowingStream<ResultSet, Error> {
        handler(environment)
    }
}
```

Alright, alright. Now you’re all yelling. Half of you are grumbling about how complex this is, and the other half are complaining about how I haven’t explained the Environment I snuck in there.

Before you all light your torches, though, let’s look at an example usage for it.

```swift
struct ExampleEngine: Engine {
    func handler(for request: Request) -> Handler {
        return .init { environment, continuation in
            guard let url = request.query.url else {
                throw SomeError.missingURL
            }

            let urlRequest = URLRequest(url: url)

            for try await (data, response) in environment.data(for: urlRequest, from: request) {
                let resultSet = try SomeParser().parse(data, with: response)
                continuation.yield(resultSet)
            }
        }
    }
}
```

Herein lies the simplicity amongst the chaos. The implementation is crazy and complicated, but I have found that complexity isn’t always bad, nor is it always avoidable. Where you choose to put that complexity is the key, and if you can make an API that feels simple and unadorned, you’re already winning.

One nicety this API provides is that I can just throw to bail out when an error occurs, and just return to finish the stream. The engine doesn’t need to know anything about the implementation; it can just dump results into the continuation as it gets them.
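To show what that throw/return behaviour boils down to, here is a pared-down, self-contained stand-in for Handler. `MiniHandler` is hypothetical. It drops the Environment parameter and hands the closure the stream's continuation directly. It also adds an `onTermination` hook, which the version above doesn't have, so the work is cancelled if the consumer stops listening early.

```swift
/// Hypothetical, pared-down stand-in for Handler (no Environment parameter).
struct MiniHandler<Element: Sendable>: Sendable {
    private let body: @Sendable (AsyncThrowingStream<Element, Error>.Continuation) async throws -> Void

    init(_ body: @escaping @Sendable (AsyncThrowingStream<Element, Error>.Continuation) async throws -> Void) {
        self.body = body
    }

    func callAsFunction() -> AsyncThrowingStream<Element, Error> {
        AsyncThrowingStream<Element, Error> { continuation in
            let task = Task {
                do {
                    // Returning normally finishes the stream...
                    try await body(continuation)
                    continuation.finish()
                } catch {
                    // ...and throwing finishes it with that error.
                    continuation.finish(throwing: error)
                }
            }
            // Not in the article's version: stop the work if the consumer goes away.
            continuation.onTermination = { _ in task.cancel() }
        }
    }
}

struct LookupFailed: Error {}

// Two example "engines" written against the wrapper.
let finishes = MiniHandler<String> { continuation in
    continuation.yield("first")
    continuation.yield("second")
}

let fails = MiniHandler<String> { continuation in
    continuation.yield("partial")
    throw LookupFailed()
}

/// Collects everything a stream yields and reports whether it ended in an error.
func drain(_ stream: AsyncThrowingStream<String, Error>) async -> (values: [String], failed: Bool) {
    var values: [String] = []
    do {
        for try await value in stream { values.append(value) }
        return (values, false)
    } catch {
        return (values, true)
    }
}
```

Values yielded before a throw still reach the consumer, followed by the error, which matches the "dump results as you get them" style described above.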

The most important part here is that the Environment takes away some of the heavy lifting, giving us a pretty simple mechanism for pulling in the data.

What this does is give us a bona fide way to insert testing mechanisms into the structure. Instead of actually performing a URLRequest (which, yeah, we could fake with URLProtocol), we can just feed pre-recorded data directly into our setup and get ourselves some sweet, sweet regression testing.

With a little work, we could even use the tests to record the data for the tests (no, that is not a typo) which is actually what I do in the production version of this code (but it’s a huge detour, so let’s leave that for another day).
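Here is a minimal sketch of the canned-data side. It assumes a simplified Environment that takes only the URLRequest; the version below also receives the originating Request. `FixtureEnvironment` and `fixture(_:url:mimeType:)` are hypothetical, and the recording half is left out entirely.

```swift
import Foundation
#if canImport(FoundationNetworking)
import FoundationNetworking  // URLRequest/URLResponse live here on Linux
#endif

/// Simplified Environment: the article's version also receives the originating Request.
protocol Environment: Sendable {
    func data(for urlRequest: URLRequest) -> AsyncThrowingStream<(Data, URLResponse), Error>
}

/// Hypothetical test double that replays canned (Data, URLResponse) pairs keyed by
/// URL, so engines can be exercised without touching the network.
struct FixtureEnvironment: Environment {
    struct MissingFixture: Error { var url: URL? }

    var fixtures: [URL: [(Data, URLResponse)]]

    func data(for urlRequest: URLRequest) -> AsyncThrowingStream<(Data, URLResponse), Error> {
        AsyncThrowingStream<(Data, URLResponse), Error> { continuation in
            guard let url = urlRequest.url, let recorded = fixtures[url] else {
                continuation.finish(throwing: MissingFixture(url: urlRequest.url))
                return
            }
            // Several entries for one URL model a source that responds more than once.
            for payload in recorded {
                continuation.yield(payload)
            }
            continuation.finish()
        }
    }
}

/// Builds one canned payload from a string body.
func fixture(_ body: String, url: URL, mimeType: String) -> (Data, URLResponse) {
    let data = Data(body.utf8)
    let response = URLResponse(
        url: url, mimeType: mimeType,
        expectedContentLength: data.count, textEncodingName: "utf-8")
    return (data, response)
}
```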

For production purposes, though, it doesn’t need to do much.

```swift
protocol Environment: Sendable {
    func data(
        for urlRequest: URLRequest,
        from queryRequest: Request
    ) -> AsyncThrowingStream<(Data, URLResponse), Error>
}

struct ProductionEnvironment: Environment {
    var urlSession: URLSession = .shared

    func data(
        for urlRequest: URLRequest,
        from queryRequest: Request
    ) -> AsyncThrowingStream<(Data, URLResponse), Error> {
        .init { continuation in
            Task {
                do {
                    // A plain HTTP request produces exactly one response.
                    let (data, response) = try await urlSession.data(for: urlRequest)
                    continuation.yield((data, response))
                    continuation.finish()
                }
                catch {
                    continuation.finish(throwing: error)
                }
            }
        }
    }
}
```

Which looks great, but… why does the data(for:from:) method return an AsyncThrowingStream, of all things?

Well, it’s because of those pesky URL queries.

Handling URL-based queries

Most of the basic engines are easy to take care of, and you’ve likely handled APIs just like theirs. You make a request, and then get back a JSON response. Easy squeezy.

URLs are less easy. I mean, sure, you can still make the request and get back something you can parse, but it’s not always that easy, which is where we loop back to Twitter. At some stage, well before it changed hands, Twitter decided to go all in on JavaScript for lazy loading content, the result of which was that the GIFs I needed to find… weren’t there.

Sure, the content would eventually be there, if I opened a URL in a browser window, but URLSession doesn’t know that. It’s using HTTP for a one-and-done call, and the JavaScript isn’t even on the table.

But a WKWebView…

Look, I’ll stop beating around the bush. This is the part where I decided to write (and eventually open-source) a wrapper for a hidden WKWebView that can answer a single URLRequest with multiple responses, one for each time the DOM is mutated. And since we’re going to that length, there’s nothing to stop us from hooking into XHR responses for something a little more JSON-y.

So now we have a way to bypass Twitter’s nonsense, and the best part is that there’s actually a private JSON API being used to provide the content, which is great. I mean, it’s a terrible API, basically a straight GraphQL dump, but the content is there.

But it’s a private API, and before we consider going down that path, we should really stop and think about what we’re doing. This isn’t like some private iOS API, where it’s likely to be unchanged for months between iOS releases. This is a private web API which could literally change every Tuesday. It’s not a showstopper or anything, it just means we need to be careful and prepare for the worst. So we do our best to parse things in a fault-tolerant way, and we fall back to the HTML if it does break.

Because they wouldn’t both break at the same time, right? RIGHT?
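The "try the private API, fall back to the HTML" approach can be sketched as a tiny combinator. Everything here is hypothetical: the JSON shape, the regular expression and the function names. `parseAPI(_:)` is only fault-tolerant in a narrow sense. Entries that are missing a `url` key are skipped instead of failing the whole decode.

```swift
import Foundation

struct NoStrategySucceeded: Error {}
struct NothingFound: Error {}

/// Runs each parse strategy in order and returns the first success,
/// rethrowing the last error only if every strategy fails.
func firstSuccessful<T>(_ strategies: [() throws -> T]) throws -> T {
    var lastError: Error = NoStrategySucceeded()
    for strategy in strategies {
        do {
            return try strategy()
        } catch {
            lastError = error
        }
    }
    throw lastError
}

/// Hypothetical private-API shape: {"media": [{"url": "..."}]}.
/// Entries without a `url` key are skipped instead of failing the decode.
func parseAPI(_ data: Data) throws -> [String] {
    struct Item: Decodable { var url: String? }
    struct Payload: Decodable { var media: [Item] }
    let urls = try JSONDecoder().decode(Payload.self, from: data).media.compactMap(\.url)
    if urls.isEmpty { throw NothingFound() }
    return urls
}

/// Hypothetical HTML fallback: collects src="..." attributes ending in .gif or .mp4.
func parseHTML(_ html: String) throws -> [String] {
    let regex = try NSRegularExpression(pattern: #"src="([^"]+\.(?:gif|mp4))""#)
    let range = NSRange(html.startIndex..., in: html)
    let urls = regex.matches(in: html, range: range).compactMap { match in
        Range(match.range(at: 1), in: html).map { String(html[$0]) }
    }
    if urls.isEmpty { throw NothingFound() }
    return urls
}
```

Whichever strategy is listed first wins whenever it works. The private API stays the preferred source, and the HTML is only used once the API stops cooperating.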

So if we update our ProductionEnvironment a little, we can now use a WebKitTask to handle our data loading, and it actually has some automatic handling for when the initial response isn’t something that will require a WKWebView. Which means the implementation can look like this:

```swift
struct ProductionEnvironment: Environment {
    var urlSession: URLSession = .shared

    func data(
        for urlRequest: URLRequest,
        from queryRequest: Request
    ) -> AsyncThrowingStream<(Data, URLResponse), Error> {
        .init { continuation in
            Task {
                do {
                    // WebKitTask can produce several payloads for one request
                    // (e.g. one per DOM mutation), and each is forwarded as-is.
                    for await payload in try await WebKitTask.load(urlRequest, using: urlSession) {
                        continuation.yield((payload.data, payload.response))
                    }
                    continuation.finish()
                }
                catch {
                    continuation.finish(throwing: error)
                }
            }
        }
    }
}
```

And now we can set up multiple parsers for handling the response from a Twitter page load, one for handling the HTML, one for the private API response, etc. This allows us to keep all those parsers nice and self-contained, and all they need to know is what the response would look like.

Tying it all together

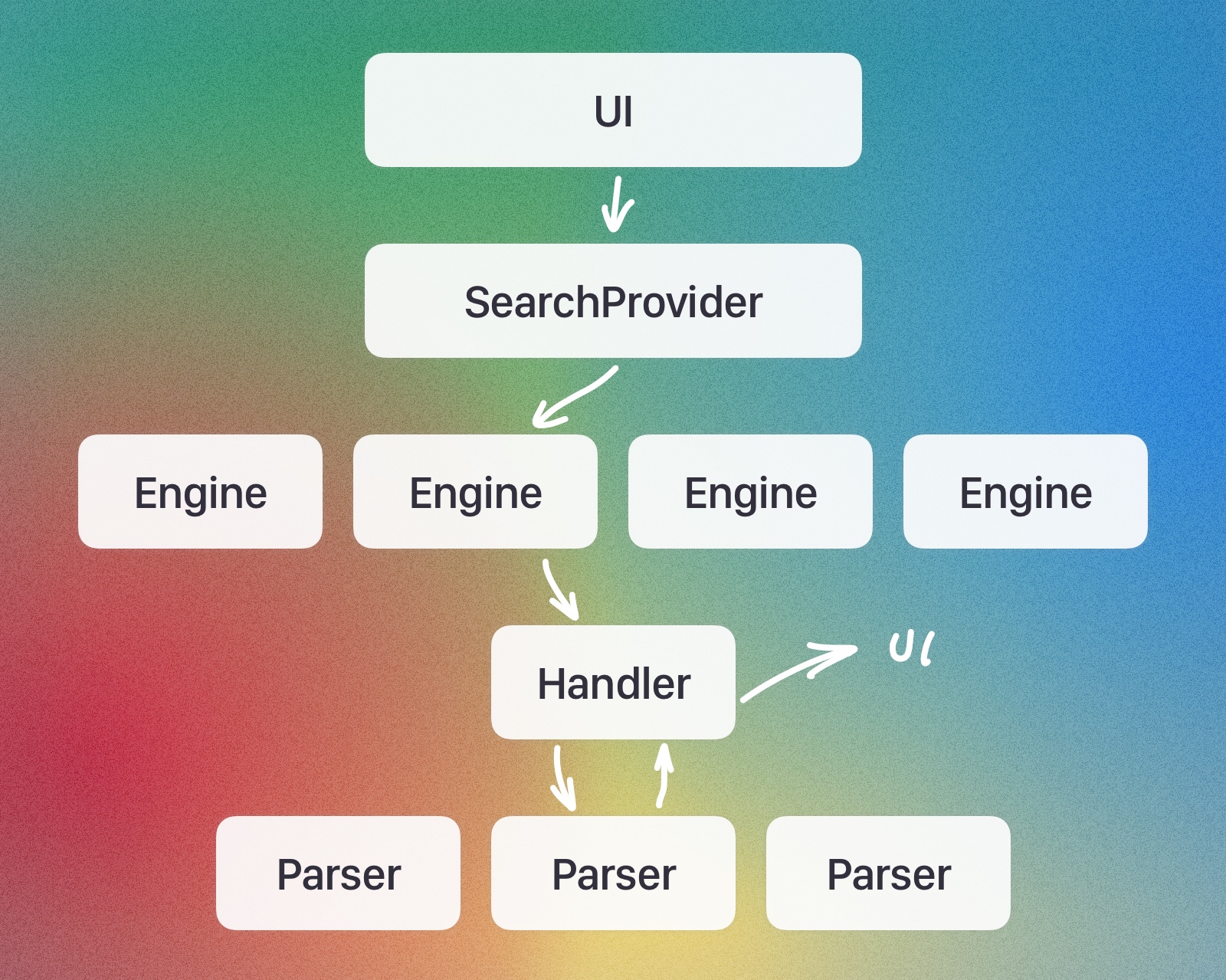

Alright. We have Engines that can take a query and return a Handler, which can, in theory, defer any processing needed to produce a result to Parsers. It could also just, you know… produce a result of its own accord.

But we need to bring it all together into some sort of API, one more like the original Engine definition we came up with. We want to be able to pass in a query, and for that query to be processed into actual results. Right now we’ve got a few holes.

The first of those holes is how we actually handle allowing all of our various Parsers to have a go at processing the content. We can obviously get a specific parser to do it, if we know what parser we want to use, but what if we don’t?

We can solve that by extending our Environment.

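```swift
protocol Environment: Sendable {
    // Added alongside the existing data(for:from:) requirement.
    var parsers: [any Parser] { get set }
}

extension Environment {
    /// Hands the data to the first registered parser that claims the response,
    /// falling back to HTTPEngine, which claims everything.
    func parse(
        data: Data,
        response: URLResponse
    ) throws -> ResultSet {
        let parser = parsers.first { $0.canParse(response) } ?? HTTPEngine()
        return try parser.parse(data, with: response)
    }
}
```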

Now if we add the parsers property to the ProductionEnvironment structure, we’ll have a method we can call to access any parser registered with it. This allows any Engine to just ask for its response to be parsed, and if the right Parser exists, it’ll just work.

Of course, the eagle-eyed amongst you might have noticed the HTTPEngine being used as a default for this call. That works because HTTPEngine is also a parser that just returns true for canParse(_:), and it just looks for URL-like strings within the content, using a really, really complicated regular expression.

You know, as the fallback that I mentioned earlier?
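Here is a self-contained sketch of that lookup order. It returns plain strings rather than a ResultSet, and `JSONListParser`, `FallbackParser` and `parseUsingFirstMatch(_:response:parsers:)` are hypothetical. The fallback only looks for tokens separated by whitespace or quotes that start with `http` and end in `.gif`. It is a much cruder stand-in for HTTPEngine's regular expression.

```swift
import Foundation
#if canImport(FoundationNetworking)
import FoundationNetworking  // URLResponse lives here on Linux
#endif

protocol Parser: Sendable {
    func canParse(_ response: URLResponse) -> Bool
    func parse(_ data: Data, with response: URLResponse) throws -> [String]
}

/// Hypothetical parser that only claims JSON bodies containing an array of URLs.
struct JSONListParser: Parser {
    func canParse(_ response: URLResponse) -> Bool {
        response.mimeType == "application/json"
    }

    func parse(_ data: Data, with response: URLResponse) throws -> [String] {
        try JSONDecoder().decode([String].self, from: data)
    }
}

/// Hypothetical catch-all: accepts any response and keeps tokens that look like
/// GIF URLs, splitting on whitespace and double quotes.
struct FallbackParser: Parser {
    func canParse(_ response: URLResponse) -> Bool { true }

    func parse(_ data: Data, with response: URLResponse) throws -> [String] {
        String(decoding: data, as: UTF8.self)
            .split(whereSeparator: { $0.isWhitespace || $0 == "\"" })
            .map(String.init)
            .filter { $0.hasPrefix("http") && $0.hasSuffix(".gif") }
    }
}

/// Same lookup order as Environment.parse(data:response:): the first registered
/// parser that claims the response wins, otherwise the catch-all takes it.
func parseUsingFirstMatch(
    _ data: Data, response: URLResponse, parsers: [any Parser]
) throws -> [String] {
    let fallback: any Parser = FallbackParser()
    let parser = parsers.first { $0.canParse(response) } ?? fallback
    return try parser.parse(data, with: response)
}
```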

So the final piece of the puzzle is to actually be able to call into this mess, and for that we have the SearchProvider.

```swift
actor SearchProvider {
    var environment: any Environment = ProductionEnvironment()
    var engines: [String: any Engine] = [:]
    var fallbackEngine = HTTPEngine()

    var parsers: [any Parser] {
        get { environment.parsers }
        set { environment.parsers = newValue }
    }

    func results(for request: Request) -> AsyncThrowingStream<ResultSet, Error> {
        .init { continuation in
            Task {
                do {
                    let engine: any Engine

                    // URLs always go to the HTTP engine, which defers to the parsers.
                    if request.query.url != nil {
                        engine = fallbackEngine
                    }
                    // A `!command` selects a registered engine by name.
                    else if
                        let command = request.query.command,
                        let requested = engines[command]
                    {
                        engine = requested
                    }
                    // Otherwise, use the default engine.
                    else if let preferred = engines["tenor"] {
                        engine = preferred
                    }
                    else {
                        // `SearchError` stands in for whatever error type the app defines.
                        throw SearchError.invalid("Unable to find an engine to process query.")
                    }

                    let handler = engine.handler(for: request)
                    for try await resultSet in handler(environment: environment) {
                        continuation.yield(resultSet)
                    }
                    continuation.finish()
                }
                catch {
                    continuation.finish(throwing: error)
                }
            }
        }
    }
}
```

And that’s it, or at least, it’s the highest-level version of it. One single interface to hook into, and the rest of the system does the work of figuring out what the user wants, where to get it from, and how to process the results.
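Since so much of this architecture is about testability, one option is to pull SearchProvider's routing decision out into a pure function that can be unit tested without an actor, a network or real engines. This is a hypothetical refactor, not how the code above is written. `Route`, `NoEngine` and `route(command:isURL:registered:preferred:)` are illustrative names, and `"tenor"` is the default, just as it is in SearchProvider.

```swift
/// Where a query should be sent.
enum Route: Equatable {
    case fallback          // URL queries: the HTTP engine plus registered parsers
    case named(String)     // a registered engine, by name
}

struct NoEngine: Error {}

/// Hypothetical pure version of SearchProvider's routing rules.
func route(
    command: String?,
    isURL: Bool,
    registered: Set<String>,
    preferred: String = "tenor"
) throws -> Route {
    if isURL { return .fallback }
    if let command, registered.contains(command) { return .named(command) }
    // An unknown or missing command falls through to the default engine.
    if registered.contains(preferred) { return .named(preferred) }
    throw NoEngine()
}
```

SearchProvider could then call this with `request.query.command`, `request.query.url != nil` and `Set(engines.keys)`, and look up the engine for the returned Route.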

Obviously this post is missing so, so many details, including actual Engine and Parser implementations, but those are honestly the easy part. I also haven’t spoken at all about how to actually implement the testing harness, but hopefully you can see how the Environment makes that a piece of cake: I can easily run smoke tests against canned and live data with very little effort.

A simplified visualisation of how we move through this new architecture.

Overall, I’m really, really happy with this solution. It presents as a simple API, and its complexity is tucked away, separate from the rest of the app. This ensures that going forward it’ll be more maintainable and easier to extend and build upon.

But it’s also more reliable, and that is good for both myself and GIFwrapped’s users.

Maybe I’ll even get around to making it possible for other developers to make Engines or Parsers for it.

Someday.

Thanks to Casey Liss, Dave Wood, and Ben McCarthy for helping me by proofing this post and making sure it, you know… makes sense. Y’all made me look at least 20% less crazy. Thanks!